Along with the constant evolution of digital marketing strategies and practices, there’s been a growing focus on technical SEO. In 2024, site speed, crawling, and indexing emerged as issues that are now central to website owners who want to rank well on search engines. But what is technical SEO, and how do you leverage it to enhance your site?

What is Technical SEO?

Technical SEO is the practice of optimizing a website’s infrastructure so that search engines such as Google can crawl and index its content efficiently. It differs from on-page SEO, which focuses on the content itself, and off-page SEO, which focuses on backlinks. Instead, technical SEO deals with the underlying aspects that make a website fast, accessible, and user-friendly.

Why is Technical SEO Critical for Website Performance in 2024?

With Google’s frequent algorithm updates, technical SEO has become a deciding factor in whether your site succeeds or disappears from search results. The faster your website loads and the easier it is to crawl and index, the better its chances of ranking well. Understanding and implementing sound technical SEO is essential to staying on top.

Why Site Speed Matters for SEO

Google’s Focus on Core Web Vitals

In 2024, Google’s algorithm emphasizes site speed more than ever through Core Web Vitals. These are metrics that measure how quickly a page loads, how fast it responds to input, and how visually stable it is. Slow-loading pages drive visitors away and can also rank lower.

User Experience and Conversion Rates

A fast site translates into a better user and customer experience. Research shows that users are far more likely to bounce when a site is slow, which directly hurts conversion rates and satisfaction. A fast site, by contrast, helps improve both your search rankings and your overall profit.

Metrics for Measuring Site Speed

Largest Contentful Paint (LCP)

LCP measures how long it takes for the largest content element visible in the viewport, typically an image or a block of text, to render on screen. Google recommends an LCP of 2.5 seconds or less.
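Google rates LCP in three bands: good at 2.5 seconds or less, needs improvement up to 4 seconds, and poor beyond that, judged at the 75th percentile of page loads. The Python sketch below applies those bands; it assumes you already collect LCP samples in seconds from real users, and the function names and sample values are illustrative. Nearest-rank is one common percentile definition; field-data tools may compute it slightly differently.

```python
import math

def percentile_75(samples):
    """Nearest-rank 75th percentile of a non-empty list of measurements."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def rate_lcp(lcp_seconds):
    """Classify an LCP value (in seconds) using Google's published bands."""
    if lcp_seconds <= 2.5:
        return "good"
    if lcp_seconds <= 4.0:
        return "needs improvement"
    return "poor"

# Hypothetical field measurements for one page, in seconds.
samples = [1.8, 2.1, 2.4, 3.9]
print(rate_lcp(percentile_75(samples)))  # prints "good"
```

The slow 3.9-second load does not drag the page out of the "good" band because three of the four loads (75%) finished within 2.5 seconds.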

First Input Delay (FID)

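FID measures the delay between a user’s first interaction with your page, such as clicking a button, and the moment the browser can start processing it. A low FID (Google considers 100 milliseconds or less good) means interactions feel responsive, which is vital for user experience. Note that in March 2024 Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital; INP measures the responsiveness of interactions throughout the visit, with 200 milliseconds or less considered good.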
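In the browser, FID is the gap between when the first interaction happened and when its event handlers started running. The sketch below models that with a hypothetical `InputEvent` record (field names loosely modelled on the browser’s Event Timing data, not a real API) and applies Google’s FID bands of 100 ms and 300 ms.

```python
from dataclasses import dataclass

@dataclass
class InputEvent:
    # Hypothetical record modelled on Event Timing data;
    # both times are milliseconds since navigation start.
    start_time: float        # when the user interacted
    processing_start: float  # when the browser started running event handlers

def first_input_delay(events):
    """FID is the input delay of the earliest interaction only."""
    if not events:
        return None  # no interaction, so no FID value is recorded
    first = min(events, key=lambda event: event.start_time)
    return first.processing_start - first.start_time

def rate_fid(fid_ms):
    """Google's FID bands: good <= 100 ms, poor > 300 ms."""
    if fid_ms <= 100:
        return "good"
    if fid_ms <= 300:
        return "needs improvement"
    return "poor"

events = [InputEvent(1200.0, 1285.0), InputEvent(3000.0, 3400.0)]
fid = first_input_delay(events)
print(fid, rate_fid(fid))  # prints "85.0 good"
```

The 400 ms delay on the second interaction never affects FID. That blind spot is the reason Google moved to INP, which looks at the latency of interactions across the whole visit.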

Cumulative Layout Shift (CLS)

CLS measures how much the visible elements on a page move unexpectedly, in other words, how stable the layout is while the page loads. Optimizing your CLS score is essential, since content that shifts suddenly annoys users and can cause accidental clicks. Google considers a CLS of 0.1 or less good.
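Each unexpected shift is scored as its impact fraction (how much of the viewport the moving elements affect) multiplied by its distance fraction (how far they move relative to the viewport). CLS then groups shifts into session windows and reports the worst window. A minimal Python sketch of that calculation, assuming you already have the timestamps and scores of individual shifts:

```python
def layout_shift_score(impact_fraction, distance_fraction):
    """Score of one unexpected shift: share of the viewport affected
    times the distance moved relative to the viewport's largest dimension."""
    return impact_fraction * distance_fraction

def cumulative_layout_shift(shifts):
    """shifts: (timestamp_ms, score) pairs for unexpected layout shifts.

    A shift joins the current session window if it comes less than 1 s after
    the previous shift and less than 5 s after the window started.
    CLS is the largest window total.
    """
    largest = 0.0
    window_total = 0.0
    window_start = previous = None
    for timestamp, score in sorted(shifts):
        if (window_start is not None
                and timestamp - previous < 1000
                and timestamp - window_start < 5000):
            window_total += score
        else:
            # Start a new session window with this shift.
            window_start = timestamp
            window_total = score
        previous = timestamp
        largest = max(largest, window_total)
    return largest

shifts = [(100, layout_shift_score(0.5, 0.1)), (600, 0.04), (3000, 0.02)]
print(round(cumulative_layout_shift(shifts), 3))  # prints 0.09
```

The first two shifts fall in the same window (0.05 + 0.04), while the third arrives more than a second later and starts a new one, so the page’s CLS is 0.09, just inside Google’s 0.1 threshold.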

How to Improve Site Speed in 2024

Reducing the Size of Images and Videos

Oversized images and videos are a leading cause of slow pages. Compressing them without sacrificing much quality will make your site noticeably faster.
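Before compressing anything, it helps to know which files are the worst offenders. This Python sketch walks a directory and lists images above a size budget, largest first; the 200 KB default and the extension list are arbitrary assumptions to adjust for your site, and the actual compression is left to your image tool of choice.

```python
import os

# Assumed set of image extensions to check; extend as needed.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".avif"}

def find_oversized_images(root, max_bytes=200 * 1024):
    """Return (path, size_in_bytes) for images under root larger than max_bytes."""
    oversized = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS:
                path = os.path.join(dirpath, name)
                size = os.path.getsize(path)
                if size > max_bytes:
                    oversized.append((path, size))
    # Largest files first, since they offer the biggest savings.
    return sorted(oversized, key=lambda item: item[1], reverse=True)

# Example (hypothetical folder):
# for path, size in find_oversized_images("public/images"):
#     print(f"{size // 1024} KB  {path}")
```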

Employing Browser Caching

Browser caching stores static parts of your site, such as images, stylesheets, and scripts, in visitors’ browsers so that pages load faster when they return. Although simple, it is an excellent performance improvement.
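Browser caching is controlled by the `Cache-Control` response header. The sketch below picks a header per path and attaches it in a handler built on Python’s standard `http.server`; the lifetimes and the assumption that fingerprinted assets carry a hex hash in their file name (such as `app.3f9a1c2b.js`) are illustrative, and production sites usually set these rules in their web server or CDN configuration instead.

```python
import http.server
import re
from urllib.parse import urlsplit

# Assumed naming convention: fingerprinted files contain a hex content hash,
# e.g. "app.3f9a1c2b.js". Adjust the patterns to your own build output.
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(css|js|png|jpe?g|webp|svg|woff2)$")
STATIC_ASSET = re.compile(r"\.(css|js|png|jpe?g|webp|svg|woff2)$")

def cache_control_for(path):
    """Choose a Cache-Control value for a URL path (illustrative lifetimes)."""
    if FINGERPRINTED.search(path):
        # A content change produces a new file name, so cache for a year.
        return "public, max-age=31536000, immutable"
    if STATIC_ASSET.search(path):
        # Unversioned assets: reuse for a day, then check with the server again.
        return "public, max-age=86400"
    # HTML and everything else: browsers must revalidate before reusing.
    return "no-cache"

class CachingHandler(http.server.SimpleHTTPRequestHandler):
    """Static file server that adds a Cache-Control header to every response."""

    def end_headers(self):
        path = urlsplit(self.path).path  # ignore any ?query string
        self.send_header("Cache-Control", cache_control_for(path))
        super().end_headers()
```

To try it locally, serve the current folder with `http.server.ThreadingHTTPServer(("", 8000), CachingHandler).serve_forever()`. Long lifetimes are safe only for fingerprinted files, because a changed file gets a new name; HTML uses `no-cache` so returning visitors still pick up new asset references.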

Minifying CSS, HTML, and JavaScript

Minification removes unnecessary characters, such as whitespace and comments, from your code files without changing how they work. The smaller files download faster and improve site speed.
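To make the idea concrete, here is a deliberately naive CSS minifier in Python. It is a sketch, not a replacement for an established build-tool minifier: it assumes the stylesheet has no strings or `url(...)` values that contain comment markers, braces, or semicolons.

```python
import re

def minify_css(css):
    """Naive CSS minifier, for illustration only."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                       # collapse whitespace runs
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)         # trim around punctuation
    # Only trim after ":"; a space before it can matter in selectors
    # ("div :first-child" differs from "div:first-child").
    css = re.sub(r":\s+", ":", css)
    css = css.replace(";}", "}")                         # last semicolon is optional
    return css.strip()

source = """
/* Header styles */
h1 ,h2 {
  color: red;
  margin: 0 auto;
}
"""
print(minify_css(source))  # prints h1,h2{color:red;margin:0 auto}
```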

Employing Content Delivery Networks (CDNs)

A CDN stores copies of your website’s content on servers around the world and serves it from them. When someone visits your site, the request is routed to a nearby server, which reduces latency and improves page load speed.
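The “nearest server” idea can be illustrated by picking the edge location with the shortest great-circle (haversine) distance to the visitor. This is a simplification: real CDNs route requests with DNS or anycast and weigh measured latency and server load, not just geography. The edge names and coordinates below are hypothetical.

```python
import math

# Hypothetical edge locations: name -> (latitude, longitude) in degrees.
EDGES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}

EARTH_RADIUS_KM = 6371.0

def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def nearest_edge(visitor, edges=EDGES):
    """Name of the edge location geographically closest to the visitor."""
    return min(edges, key=lambda name: haversine_km(visitor, edges[name]))

print(nearest_edge((51.51, -0.13)))  # a visitor in London; prints frankfurt
```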

Crawling and Indexing in 2024

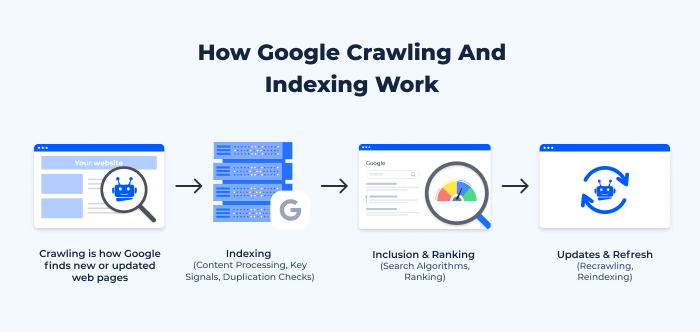

What Is Crawling, and Why Is It Important?

Crawling is the process by which search engine bots (also called spiders or crawlers), such as Googlebot, discover and scan your web pages. It is the first step in getting your content in front of an audience. If a page is not crawled, it cannot be indexed, which means it will not appear in search results.
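At its core, crawling means fetching a page, extracting its links, and queuing the new URLs. The Python sketch below shows the link-extraction step using only the standard library; it works on an HTML string you have already fetched, and `example.com` is a placeholder. A real crawler also respects robots.txt, limits its request rate, and (in Google’s case) renders JavaScript.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href> tags, the way a crawler discovers pages."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop #fragments, which don't identify a new page.
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            if absolute not in self.links:
                self.links.append(absolute)

def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links

page = '<a href="/about">About</a> <a href="blog/post-1#comments">Post</a>'
print(extract_links(page, "https://example.com/index.html"))
```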

Importance of Crawl Budget

Crawl budget is the number of pages Googlebot will crawl on a website within a given time frame. The larger a site grows, the more important it becomes to manage this budget by prioritizing essential pages and limiting the crawling of lower-value ones.

How Do Pages Get Indexed?

Indexing is the process by which search engines store and organize the content they have crawled so it can be retrieved for relevant queries. Once a page is indexed, it becomes eligible to appear in search results.
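A classic way to “store and organize” text for retrieval is an inverted index, which maps each word to the pages that contain it. The toy version below only illustrates the concept; a real search engine index is vastly more complex and also stores ranking signals. The page URLs and text are made up.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase words made of letters and digits."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(pages):
    """pages: dict of URL -> page text. Returns term -> set of URLs containing it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index

def search(index, query):
    """Return URLs that contain every term in the query (simple AND search)."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results

pages = {"/a": "Technical SEO basics", "/b": "SEO for site speed", "/c": "Cooking pasta"}
index = build_index(pages)
print(sorted(search(index, "seo")))  # prints ['/a', '/b']
```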

Differences Between Crawling and Indexing

Crawling is discovering your content, while indexing is storing it so that it can be shown when users search for related topics.

Optimizing Your Crawl Budget

Prioritizing High-Value Pages

Make sure the pages you most need indexed are crawled first. These typically include the homepage, key product pages, and important blog posts that drive traffic and conversions.
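You can’t reorder Googlebot’s queue directly; your levers are internal links, XML sitemaps, and robots.txt rules. A simple ranked list helps decide which URLs deserve those signals and which are candidates to keep out of the crawl. The page types and weights in this Python sketch are hypothetical and should come from your own traffic and conversion data.

```python
# Hypothetical priority weights per page type (higher = more important).
PAGE_PRIORITY = {"home": 100, "product": 80, "blog": 60, "category": 50,
                 "tag": 10, "internal-search": 0}
DEFAULT_PRIORITY = 20  # assumed weight for page types not listed above

def priority(page_type):
    return PAGE_PRIORITY.get(page_type, DEFAULT_PRIORITY)

def prioritize_urls(pages, budget):
    """pages: (url, page_type) pairs. Returns up to `budget` URLs, most important first."""
    # sorted() is stable, so equally weighted pages keep their input order.
    ranked = sorted(pages, key=lambda page: priority(page[1]), reverse=True)
    return [url for url, _page_type in ranked[:budget]]

def low_value_urls(pages, threshold=DEFAULT_PRIORITY):
    """URLs weighted below the threshold: candidates to exclude from crawling."""
    return [url for url, page_type in pages if priority(page_type) < threshold]
```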

Managing Duplicate Content

Duplicate content is a problem on two fronts: it adds little value for users, and it confuses search engines about which version of a page to index and rank. Use canonical tags to tell Google which version is the preferred one.
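A canonical tag is a `<link rel="canonical" href="https://example.com/shoes/red">` element in the page’s `<head>`. When auditing duplicates (for example, URL variants with tracking parameters), this Python sketch reads the canonical URL a page declares so you can confirm every variant points to the preferred version; the URLs are placeholders.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel can hold several space-separated values.
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")

def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonical

variant = ('<html><head><link rel="canonical" href="https://example.com/shoes/red">'
           '</head><body>Red shoes</body></html>')
print(find_canonical(variant))  # prints https://example.com/shoes/red
```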

Using Robots.txt and Meta Tags Effectively

A robots.txt file sits at the root of your site and tells crawlers which URLs they may or may not crawl. Meta robots tags such as “noindex” tell search engines not to index specific pages. Keep in mind that robots.txt controls crawling rather than indexing: Google cannot see a “noindex” tag on a page it is blocked from crawling.

Ensuring Proper Indexing for SEO Success

Google Search Console for Indexing Insights

Check Google Search Console regularly for crawling and indexing issues. The tool also gives you an overview of your site’s search performance, which helps guide your SEO work.
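When Search Console reports URLs as blocked by robots.txt, you can also test your rules locally with Python’s standard `urllib.robotparser` module. The robots.txt content and URLs below are hypothetical. Python’s parser is simpler than Google’s (for example, it does not support `*` and `$` wildcards inside paths), so treat it as a quick first check.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt. To check a live site instead, use
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: Googlebot
Disallow: /staging/
"""

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given crawler is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

urls = ["https://example.com/cart/1", "https://example.com/staging/x"]
print(blocked_urls(ROBOTS_TXT, urls))                  # Googlebot
print(blocked_urls(ROBOTS_TXT, urls, "SomeOtherBot"))  # falls back to the * group
```

The Googlebot result may be surprising: because the file has a group specifically for Googlebot, Googlebot follows only that group and ignores the `User-agent: *` rules, so `/cart/` stays crawlable for it.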

Structured Data for Enhanced Indexing

Structured data helps search engines understand what your content is about. Adding schema markup to your pages makes them eligible for rich results (rich snippets), which can make your listings more prominent in search results and improve click-through rates.
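Google recommends JSON-LD as the format for structured data. This Python sketch generates schema.org `Article` markup ready to place in a page’s HTML; the property values are placeholders, and valid markup makes a page eligible for rich results without guaranteeing them.

```python
import json

def article_json_ld(headline, author, date_published, url):
    """Build schema.org Article markup wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-05-01"
        "mainEntityOfPage": url,
    }
    # Escape "</" so text in the data can't close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

print(article_json_ld("Technical SEO in 2024", "Jane Doe", "2024-05-01",
                      "https://example.com/technical-seo"))
```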

Advanced Crawling and Indexing Techniques

Utilizing Log File Analysis

Log file analysis lets you see exactly how search engines crawl your site. The data can help you spot crawl inefficiencies and opportunities for improvement.
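A minimal log-analysis sketch in Python, assuming your server writes the common Apache/Nginx “combined” log format: it counts Googlebot requests per URL and status code, which quickly shows crawl budget spent on errors or low-value pages. User-agent strings can be faked, so verify important findings before acting on them (Google documents a reverse DNS check for confirming Googlebot).

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption; adjust to your server's format).
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count requests whose user agent claims to be Googlebot, per (path, status)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), match.group("status"))] += 1
    return hits

# Typical use (hypothetical path):
# with open("/var/log/nginx/access.log") as log:
#     for (path, status), count in googlebot_hits(log).most_common(20):
#         print(count, status, path)
```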

Implementing XML Sitemaps for SEO Success

An XML sitemap lists the important pages on your website so that search engines can discover them. Submitting your sitemap to Google through Google Search Console is a simple way to help ensure those pages get crawled.
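XML sitemaps follow the sitemaps.org protocol. This Python sketch builds one with the standard library’s `xml.etree.ElementTree`; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """pages: (url, last_modified) pairs; last_modified is a W3C date such as
    "2024-05-01", or None to omit <lastmod>. Returns UTF-8 encoded XML bytes."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if last_modified:
            ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

xml_bytes = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/", None),
])
print(xml_bytes.decode("utf-8"))
```

Write the returned bytes to `sitemap.xml` at your site root, then submit it in Search Console or reference it from robots.txt with a `Sitemap:` line. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed; larger sites split theirs and list the parts in a sitemap index.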

Making JavaScript Sites Crawlable and Indexable

Websites that rely heavily on JavaScript can be harder for search engines to crawl and index. Make sure vital content is available without requiring much JavaScript execution, and consider server-side rendering.
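Google can render JavaScript, but rendering may happen later than the initial crawl, so it is worth confirming that vital content is already present in the server’s HTML response. This Python sketch checks whether a phrase appears in raw HTML while ignoring text inside `<script>`, `<style>`, and `<template>`; it assumes you have already fetched the page source, and the sample markup is invented.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects text from raw HTML, skipping elements browsers don't display as text."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)

def in_initial_html(html, phrase):
    """True if the phrase is visible text in the HTML before any JavaScript runs."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return " ".join(phrase.split()).lower() in text

server_rendered = "<body><h1>Red running shoes</h1></body>"
client_rendered = ('<body><div id="root"></div>'
                   '<script>root.textContent = "Red running shoes";</script></body>')
print(in_initial_html(server_rendered, "red running shoes"))  # prints True
print(in_initial_html(client_rendered, "red running shoes"))  # prints False
```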

Final Thoughts

Technical SEO in 2024 is not optional. Site speed, crawling, and indexing aren’t bonuses; they are crucial to running a successful, high-quality website. The key takeaway from this article is that investing in technical SEO keeps your website robust, secure, and optimized for search engines.

FAQ

How often should I check my website’s speed for SEO?

Checking once a month is a good baseline. You should also check whenever the site’s structure or design changes.

What is the recommended page load time for SEO?

Pages should load in under three seconds for a site to perform well, and ideally in two seconds or less.

How do I resolve crawling problems in Google Search Console?

Start by reviewing the errors reported in Google Search Console, then fix their causes as appropriate: edit your robots.txt file to remove unintended crawl restrictions, or update your sitemap.

What happens if my site is not indexed?

If your site is not indexed, it will not appear in search results, so potential visitors will not find your content.

Is technical SEO less relevant than on-page SEO?

Both are equally important. Technical SEO ensures that search engines can crawl, index, and understand your site, while on-page SEO ensures that its content is relevant to your audience.